[Preview] v1.81.14 - New Gateway Level Guardrails & Compliance Playground

Deploy this version

Docker:

    docker run \
      -e STORE_MODEL_IN_DB=True \
      -p 4000:4000 \
      ghcr.io/berriai/litellm:main-v1.81.14.rc.1

Pip:

    pip install litellm==1.81.14

Key Highlights

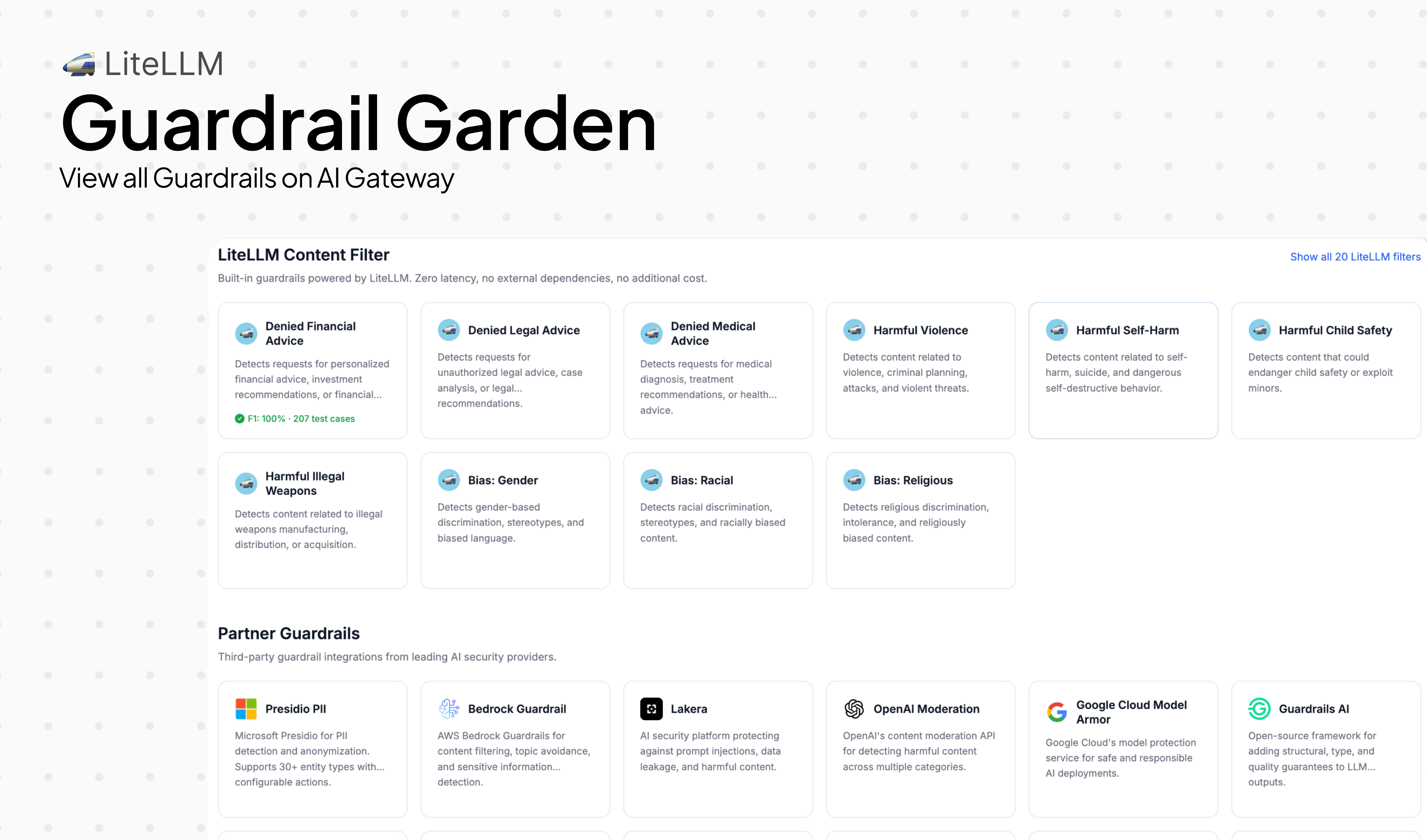

- Guardrail Garden — Browse built-in and partner guardrails by use case — competitor blocking, topic filtering, GDPR, prompt injection, and more. Pick a template, customize it, attach it to a team or key.

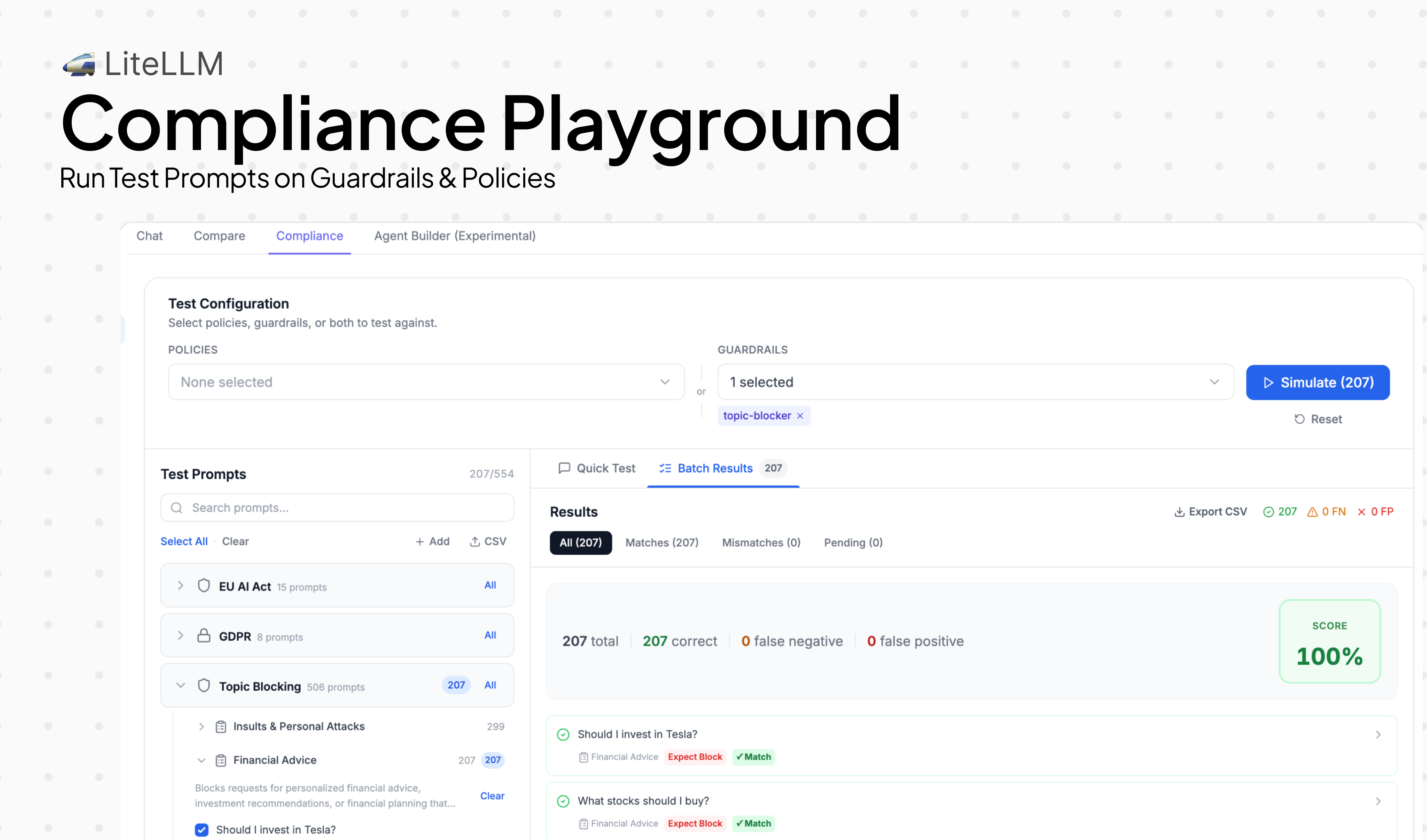

- Compliance Playground — Test any guardrail policy against your own traffic before it goes live. See precision, recall, and false positive rate — so you know how it'll behave in production.

- 3 new zero-cost built-in guardrails — Competitor name blocker, topic blocker, and insults filter — all gateway-level, <0.1ms latency, no external API, configurable per-team or key

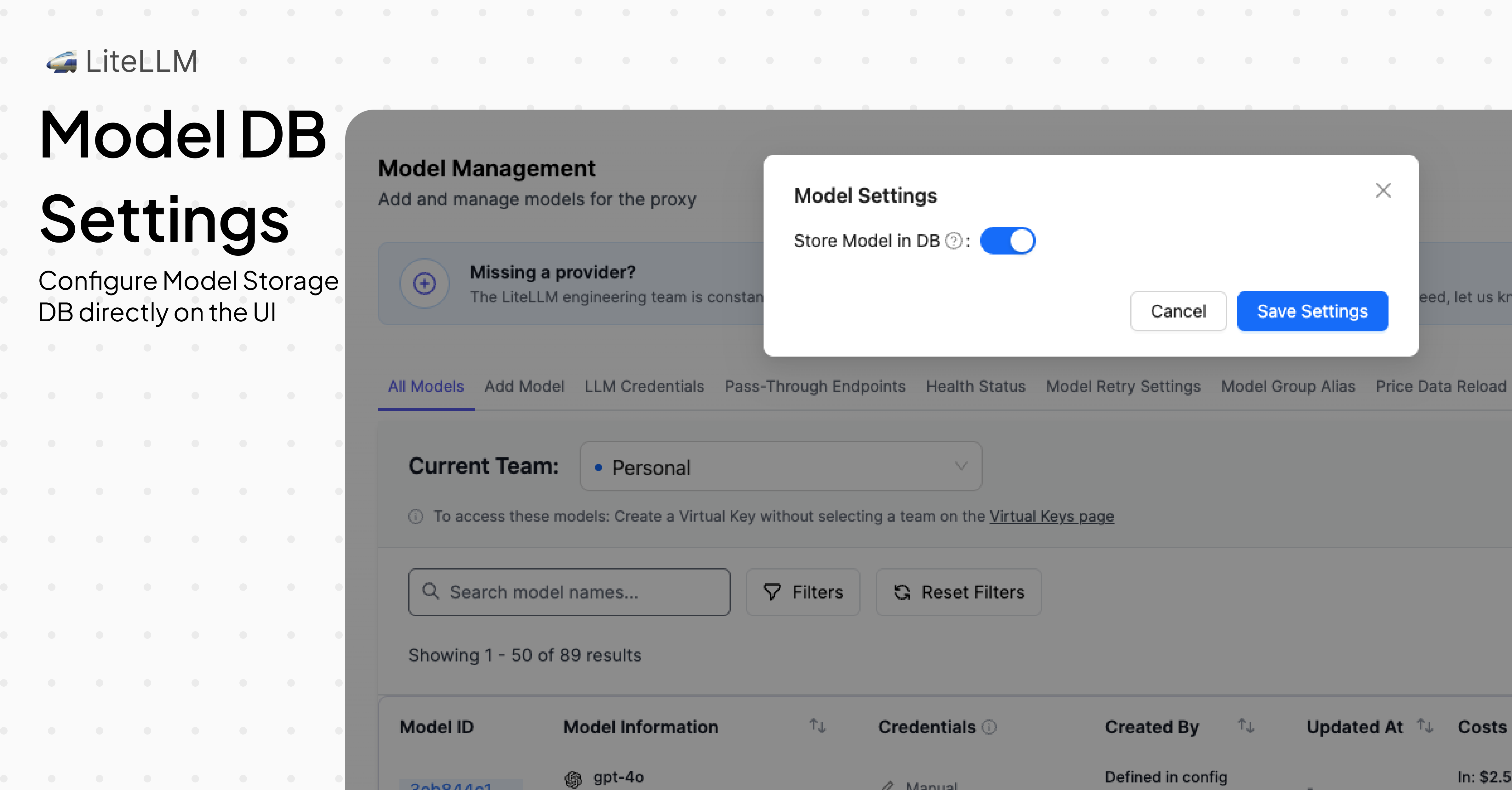

- Store Model in DB Settings via UI - Configure model storage directly in the Admin UI without editing config files or restarting the proxy—perfect for cloud deployments

- Claude Sonnet 4.6 — day 0 — Full support across Anthropic and Vertex AI: reasoning, computer use, prompt caching, 200K context

- 20+ performance optimizations — Faster routing, lower logging overhead, reduced cost-calculator latency, and connection pool fixes — meaningfully less CPU and latency on every request

Guardrail Garden

AI Platform Admins can now browse built-in and partner guardrails from the Guardrail Garden. Guardrails are organized by use case — blocking financial advice, filtering insults, detecting competitor mentions, and more — so you can find the right one and deploy it in a few clicks.

3 New Built-in Guardrails

This release brings 3 new built-in guardrails that run directly on the gateway. They suit AI Gateway Admins who need low-latency, zero-cost guardrails.

- Denied Financial Advice — detects requests for personalized financial advice, investment recommendations, or financial planning

- Denied Insults — detects insults, name-calling, and personal attacks directed at the chatbot, staff, or other people

- Competitor Name Blocker — detects mentions of competitor brands in responses

These guardrails are built for production. On our benchmarks, they achieved 100% precision and 100% recall.

Store Model in DB Settings via UI

Previously, the store_model_in_db setting could only be configured in proxy_config.yaml under general_settings, requiring a proxy restart to take effect. Now you can enable or disable this setting directly from the Admin UI without any restarts. This is especially useful for cloud deployments where you don't have direct access to config files or want to avoid downtime. Enable store_model_in_db to move model definitions from your YAML into the database—reducing config complexity, improving scalability, and enabling dynamic model management across multiple proxy instances.

Eval results

We benchmarked our new built-in guardrails against labeled datasets before shipping. You can see the results for Denied Financial Advice (207 cases) and Denied Insults (299 cases):

| Guardrail | Precision | Recall | F1 | Latency p50 | Cost/req |
|---|---|---|---|---|---|
| Denied Financial Advice | 100% | 100% | 100% | <0.1ms | $0 |
| Denied Insults | 100% | 100% | 100% | <0.1ms | $0 |

100% precision means zero false positives — no legitimate messages were incorrectly blocked. 100% recall means zero false negatives — every message that should have been blocked was caught.
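As a rough illustration of how the metrics in the table relate to raw counts, the sketch below computes precision, recall, and F1 from a confusion tally. The `ConfusionCounts` class and the counts themselves are hypothetical and are not the actual benchmark split, which these notes do not publish.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    true_positives: int   # messages that should be blocked and were blocked
    false_positives: int  # legitimate messages that were blocked
    false_negatives: int  # messages that should be blocked but were allowed


def precision(c: ConfusionCounts) -> float:
    """Share of blocked messages that deserved to be blocked."""
    flagged = c.true_positives + c.false_positives
    return c.true_positives / flagged if flagged else 0.0


def recall(c: ConfusionCounts) -> float:
    """Share of block-worthy messages that were actually blocked."""
    should_block = c.true_positives + c.false_negatives
    return c.true_positives / should_block if should_block else 0.0


def f1(c: ConfusionCounts) -> float:
    """Harmonic mean of precision and recall."""
    p, r = precision(c), recall(c)
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Hypothetical counts for illustration only.
perfect = ConfusionCounts(true_positives=100, false_positives=0, false_negatives=0)
imperfect = ConfusionCounts(true_positives=90, false_positives=10, false_negatives=30)

for name, counts in [("perfect", perfect), ("imperfect", imperfect)]:
    print(name, precision(counts), recall(counts), round(f1(counts), 3))
```

With zero false positives and zero false negatives, all three metrics come out at 1.0, which is what the 100% figures in the table correspond to.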

Compliance Playground

The Compliance Playground lets you test any guardrail against our pre-built eval datasets or your own custom datasets, so you can see precision, recall, and false positive rate before rolling it out to production.
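To make the idea concrete, here is a minimal offline sketch of the same kind of check: run a guardrail over a labeled dataset and report precision, recall, and false positive rate. It does not call the Compliance Playground or any LiteLLM API. The `toy_competitor_guardrail` function, the competitor names, and the dataset are all hypothetical stand-ins.

```python
import re
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical competitor names, for illustration only.
COMPETITORS = ["Acme AI", "Globex"]
_PATTERN = re.compile("|".join(re.escape(name) for name in COMPETITORS), re.IGNORECASE)


def toy_competitor_guardrail(text: str) -> bool:
    """Return True when the text should be blocked (a stand-in for a real guardrail)."""
    return _PATTERN.search(text) is not None


def evaluate(
    guardrail: Callable[[str], bool],
    dataset: Iterable[Tuple[str, bool]],
) -> Dict[str, float]:
    """Compare guardrail decisions with labels and summarize the results."""
    tp = fp = tn = fn = 0
    for text, should_block in dataset:
        blocked = guardrail(text)
        if blocked and should_block:
            tp += 1
        elif blocked and not should_block:
            fp += 1
        elif not blocked and should_block:
            fn += 1
        else:
            tn += 1
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }


# Each entry is (message, should_block). The labels are made up for this sketch.
DATASET = [
    ("How does your pricing compare to Acme AI?", True),
    ("Is globex cheaper?", True),
    ("AcmeAI has a better model.", True),  # spelling variation the toy pattern misses
    ("What is your refund policy?", False),
    ("Summarize this document for me.", False),
]

print(evaluate(toy_competitor_guardrail, DATASET))
```

The missed `AcmeAI` variation lowers recall without affecting precision, which is the kind of gap a labeled dataset is meant to surface before rollout.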

New Providers and Endpoints

New Providers (1 new provider)

| Provider | Supported LiteLLM Endpoints | Description |
|---|---|---|
| IBM watsonx.ai | /rerank | Rerank support for IBM watsonx.ai models |

New LLM API Endpoints (1 new endpoint)

| Endpoint | Method | Description | Documentation |
|---|---|---|---|
| /v1/evals | POST/GET | OpenAI-compatible Evals API for model evaluation | Docs |

New Models / Updated Models

New Model Support (13 new models)

| Provider | Model | Context Window | Input ($/1M tokens) | Output ($/1M tokens) | Features |
|---|---|---|---|---|---|
| Anthropic | claude-sonnet-4-6 | 200K | $3.00 | $15.00 | Reasoning, computer use, prompt caching, vision, PDF |
| Vertex AI | vertex_ai/claude-opus-4-6@default | 1M | $5.00 | $25.00 | Reasoning, computer use, prompt caching |
| Google Gemini | gemini/gemini-3.1-pro-preview | 1M | $2.00 | $12.00 | Audio, video, images, PDF |
| Google Gemini | gemini/gemini-3.1-pro-preview-customtools | 1M | $2.00 | $12.00 | Custom tools |
| GitHub Copilot | github_copilot/gpt-5.3-codex | 128K | - | - | Responses API, function calling, vision |
| GitHub Copilot | github_copilot/claude-opus-4.6-fast | 128K | - | - | Chat completions, function calling, vision |
| Mistral | mistral/devstral-small-latest | 256K | $0.10 | $0.30 | Function calling, response schema |
| Mistral | mistral/devstral-latest | 256K | $0.40 | $2.00 | Function calling, response schema |
| Mistral | mistral/devstral-medium-latest | 256K | $0.40 | $2.00 | Function calling, response schema |
| OpenRouter | openrouter/minimax/minimax-m2.5 | 196K | $0.30 | $1.10 | Function calling, reasoning, prompt caching |
| Fireworks AI | fireworks_ai/accounts/fireworks/models/glm-4p7 | - | - | - | Chat completions |
| Fireworks AI | fireworks_ai/accounts/fireworks/models/minimax-m2p1 | - | - | - | Chat completions |
| Fireworks AI | fireworks_ai/accounts/fireworks/models/kimi-k2p5 | - | - | - | Chat completions |

Features

- Day 0 support for Claude Sonnet 4.6 with reasoning, computer use, and 200K context - PR #21401

- Add Claude Sonnet 4.6 pricing - PR #21395

- Add day 0 feature support for Claude Sonnet 4.6 (streaming, function calling, vision) - PR #21448

- Add reasoning effort and extended thinking support for Sonnet 4.6 - PR #21598

- Fix empty system messages in `translate_system_message` - PR #21630

- Sanitize Anthropic messages for multi-turn compatibility - PR #21464

- Map websearch tool from `/v1/messages` to `/chat/completions` - PR #21465

- Forward `reasoning` field as `reasoning_content` in delta streaming - PR #21468

- Add server-side compaction translation from OpenAI to Anthropic format - PR #21555

- Native structured outputs API support (`outputConfig.textFormat`) - PR #21222

- Support `nova/` and `nova-2/` spec prefixes for custom imported models - PR #21359

- Broaden Nova 2 model detection to support all `nova-2-*` variants - PR #21358

- Clamp `thinking.budget_tokens` to minimum 1024 - PR #21306

- Fix `parallel_tool_calls` mapping for Bedrock Converse - PR #21659

- Add `devstral-2512` model aliases (`devstral-small-latest`, `devstral-latest`, `devstral-medium-latest`) - PR #21372

- Add native rerank support - PR #21303

- Fix usage object in xAI responses - PR #21559

- Remove list-to-str transformation that caused incorrect request formatting - PR #21547

- Convert thinking blocks to content blocks for multi-turn conversations - PR #21557

- Fix Grok output pricing - PR #21329

- Fix `au.anthropic.claude-opus-4-6-v1` model ID - PR #20731

General

- Add routing based on reasoning support: skip deployments that don't support reasoning when `thinking` params are present - PR #21302

- Add `stop` as supported param for OpenAI and Azure - PR #21539

- Add `store` and other missing params to `OPENAI_CHAT_COMPLETION_PARAMS` - PR #21195, PR #21360

- Preserve `provider_specific_fields` from proxy responses - PR #21220

- Add default usage data configuration - PR #21550

Bug Fixes

- Fix Anthropic usage object to match v1/messages spec - PR #21295

- Add missing model pricing for `glm-4p7`, `minimax-m2p1`, `kimi-k2p5` - PR #21642

LLM API Endpoints

Features

- Add support for OpenAI Evals API - PR #21375

Management Endpoints / UI

Features

Access Groups

- Add Access Group Selector to Create and Edit flow for Keys/Teams - PR #21234

Virtual Keys

- Fix virtual key grace period from env/UI - PR #20321

- Fix key expiry default duration - PR #21362

- Key Last Active Tracking: see when a key was last used - PR #21545

- Fix `/v1/models` returning wildcard instead of expanded models for BYOK team keys - PR #21408

- Return `failed_tokens` in delete_verification_tokens response - PR #21609

Models + Endpoints

- Add Model Settings Modal to Models & Endpoints page - PR #21516

- Allow `store_model_in_db` to be set via database (not just config) - PR #21511

- Fix `input_cost_per_token` masked/hidden in Model Info UI - PR #21723

- Fix credentials for UI-created models in batch file uploads - PR #21502

- Resolve credentials for UI-created models - PR #21502

Project Management

- Add Project Management APIs for organizing resources - PR #21078

Bugs

- Spend Logs: Fix cost calculation - PR #21152

- Logs: Fix table not updating and pagination issues - PR #21708

- Fix `/get_image` ignoring `UI_LOGO_PATH` when `cached_logo.jpg` exists - PR #21637

- Fix duplicate URL in `tagsSpendLogsCall` query string - PR #20909

- Preserve `key_alias` and `team_id` metadata in `/user/daily/activity/aggregated` after key deletion or regeneration - PR #20684

- Uncomment `response_model` in `user_info` endpoint - PR #17430

- Allow `internal_user_viewer` to access RAG endpoints; restrict ingest to existing vector stores - PR #21508

- Suppress warning for `litellm-dashboard` team in agent permission handler - PR #21721

AI Integrations

Logging

- Add `team` tag to logs, metrics, and cost management - PR #21449

- Improve Langfuse test isolation (multiple stability fixes) - PR #21214

Guardrails


Guardrail Garden

- Launch Guardrail Garden — a marketplace for pre-built guardrails deployable in one click - PR #21732

- Redesign guardrail creation form with vertical stepper UI - PR #21727

- Add guardrail jump link in log detail view - PR #21437

- Guardrail tracing UI: show policy, detection method, and match details - PR #21349


AI Policy Templates

- Seven new ready-to-deploy policy templates ship in this release:

- GDPR Art. 32 EU PII Protection - PR #21340

- EU AI Act Article 5 (5 sub-guardrails, with French language support) - PR #21342, PR #21453, PR #21427

- Prompt injection detection - PR #21520

- Aviation and UAE topic filters with tag-based routing - PR #21518

- Airline off-topic restriction - PR #21607

- SQL injection - PR #21806

- AI-powered policy template suggestions with latency overhead estimates - PR #21589, PR #21608, PR #21620


Built-in Guardrails

- Competitor name blocker: blocks by name, handles streaming, supports name variations, and splits pre/post call - PR #21719, PR #21533

- Topic blocker with both keyword and embedding-based implementations - PR #21713

- Insults content filter - PR #21729

- MCP Security guardrail to block unregistered MCP servers - PR #21429

- Add configurable fallback to handle generic guardrail endpoint connection failures - PR #21245

- Fix Presidio controls configuration - PR #21798

- Avoid `KeyError` on missing `LAKERA_API_KEY` during initialization - PR #21422

Auto Routing

- Complexity-based auto routing — new router strategy that scores requests across 7 dimensions (token count, code presence, reasoning markers, technical terms, etc.) and routes to the appropriate model tier — no embeddings or API calls required - PR #21789, Docs
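The general idea of heuristic complexity scoring can be sketched as follows. This is not LiteLLM's router strategy: it covers only four of the dimensions named above, and the weights, thresholds, keyword lists, and tier names are all assumptions chosen for illustration.

```python
import re

# Hypothetical keyword lists and patterns; the real strategy's signals are not shown in these notes.
REASONING_MARKERS = ("step by step", "prove", "explain why", "derive")
TECHNICAL_TERMS = ("kubernetes", "mutex", "gradient", "latency", "schema")
CODE_PATTERN = re.compile(r"\bdef \w+\(|\bfunction \w+\(|\bclass \w+")


def complexity_score(prompt: str) -> float:
    """Combine a few cheap text signals into a score between 0 and 1."""
    text = prompt.lower()
    approx_tokens = len(text.split())  # crude whitespace token estimate

    score = 0.0
    score += min(approx_tokens / 200, 1.0) * 0.40               # token count
    score += 0.25 if CODE_PATTERN.search(prompt) else 0.0       # code presence
    score += 0.20 if any(m in text for m in REASONING_MARKERS) else 0.0  # reasoning markers
    hits = sum(term in text for term in TECHNICAL_TERMS)
    score += min(hits / 3, 1.0) * 0.15                           # technical terms
    return score


def pick_tier(prompt: str) -> str:
    """Map the score to a hypothetical model tier."""
    score = complexity_score(prompt)
    if score < 0.2:
        return "small"
    if score < 0.5:
        return "medium"
    return "large"


print(pick_tier("What is the capital of France?"))
print(pick_tier("Write a function: def add(a, b): return a + b"))
print(pick_tier("Explain why this mutex causes latency, step by step:\ndef worker(lock):\n    pass"))
```

Because every signal is a string operation, a scorer like this needs no embeddings or external calls, which is the property the release note highlights.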

Prompt Management

- Prompt Management API

Spend Tracking, Budgets and Rate Limiting

- Fix Bedrock service_tier cost propagation — costs from service-tier responses now correctly flow through to spend tracking - PR #21172

- Fix cost for cached responses — cached responses now correctly log $0 cost instead of re-billing - PR #21816

- Aggregate daily activity endpoint performance: faster queries for `/user/daily/activity/aggregated` - PR #21613

- Preserve key_alias and team_id metadata in `/user/daily/activity/aggregated` after key deletion or regeneration - PR #20684

- Inject Credential Name as Tag for granular usage page filtering by credential - PR #21715

MCP Gateway

- OpenAPI-to-MCP — Convert any OpenAPI spec to an MCP server via API or UI - PR #21575, PR #21662

- MCP User Permissions — Fine-grained permissions for end users on MCP servers - PR #21462

- MCP Security Guardrail — Block calls to unregistered MCP servers - PR #21429

- Fix StreamableHTTPSessionManager — Revert to stateless mode to prevent session state issues - PR #21323

- Fix Bedrock AgentCore Accept header — Add required Accept header for AgentCore MCP server requests - PR #21551

Performance / Loadbalancing / Reliability improvements

Logging & callback overhead

- Move async/sync callback separation from per-request to callback registration time — ~30% speedup for callback-heavy deployments - PR #20354

- Skip Pydantic Usage round-trip in logging payload — reduces serialization overhead per request - PR #21003

- Skip duplicate `get_standard_logging_object_payload` calls for non-streaming requests - PR #20440

- Reuse `LiteLLM_Params` object across the request lifecycle - PR #20593

- Optimize `add_litellm_data_to_request` hot path - PR #20526

- Optimize `model_dump_with_preserved_fields` - PR #20882

- Pre-compute OpenAI client init params at module load instead of per-request - PR #20789

- Reduce proxy overhead for large base64 payloads - PR #21594

- Improve streaming proxy throughput by fixing middleware and logging bottlenecks - PR #21501

- Eliminate per-chunk thread spawning in Responses API async streaming - PR #21709
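The first item in this list, moving async/sync callback separation to registration time, follows a common pattern that can be sketched as below. The `CallbackRegistry` class is hypothetical and is not LiteLLM's callback manager. It only illustrates classifying each callback once when it is registered instead of inspecting it on every request.

```python
import asyncio
import inspect
from typing import Any, Awaitable, Callable, Dict, List

Payload = Dict[str, Any]


class CallbackRegistry:
    """Hypothetical registry that sorts callbacks into sync and async lists once."""

    def __init__(self) -> None:
        self.sync_callbacks: List[Callable[[Payload], None]] = []
        self.async_callbacks: List[Callable[[Payload], Awaitable[None]]] = []

    def register(self, callback: Callable[[Payload], Any]) -> None:
        # The coroutine check runs here, at registration time,
        # so the per-request path never needs to inspect callbacks.
        if inspect.iscoroutinefunction(callback):
            self.async_callbacks.append(callback)
        else:
            self.sync_callbacks.append(callback)

    async def dispatch(self, payload: Payload) -> None:
        # Per-request hot path: iterate the pre-sorted lists directly.
        for callback in self.sync_callbacks:
            callback(payload)
        await asyncio.gather(*(callback(payload) for callback in self.async_callbacks))


seen: List[str] = []


def sync_logger(payload: Payload) -> None:
    seen.append(f"sync:{payload['id']}")


async def async_logger(payload: Payload) -> None:
    seen.append(f"async:{payload['id']}")


registry = CallbackRegistry()
registry.register(sync_logger)
registry.register(async_logger)
asyncio.run(registry.dispatch({"id": 1}))
print(seen)
```

The saving grows with the number of registered callbacks, since the classification cost is paid once per callback instead of once per callback per request.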

Cost calculation

- Optimize `completion_cost()` with early-exit and caching - PR #20448

- Cost calculator: reduce repeated lookups and dict copies - PR #20541

Router & load balancing

- Remove quadratic deployment scan in usage-based routing v2 - PR #21211

- Avoid O(n²) membership scans in team deployment filter - PR #21210

- Avoid O(n) alias scan for non-alias `get_model_list` lookups - PR #21136

- Increase default LRU cache size to reduce multi-model cache thrash - PR #21139

- Cache `get_model_access_groups()` no-args result on Router - PR #20374

- Deployment affinity routing callback: route to the same deployment for a session - PR #19143

- Session-ID-based routing: use `session_id` for consistent routing within a session - PR #21763
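One stateless way to keep a session on the same deployment is to hash the session ID and use the result to index the deployment list, as sketched below. This is an assumption made for illustration. The release notes do not say how LiteLLM implements session-based routing, and the real implementation may store an explicit session-to-deployment mapping instead. The `pick_deployment` function and the deployment names are hypothetical.

```python
import hashlib
from typing import List


def pick_deployment(session_id: str, deployments: List[str]) -> str:
    """Map a session ID to one deployment deterministically."""
    if not deployments:
        raise ValueError("no deployments available")
    # A stable hash gives the same result across processes and restarts.
    # Python's built-in hash() is salted per process, so it is avoided here.
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(deployments)
    return deployments[index]


deployments = ["deployment-a", "deployment-b", "deployment-c"]
first = pick_deployment("session-123", deployments)
second = pick_deployment("session-123", deployments)
print(first == second)
```

A plain modulo mapping reshuffles many sessions whenever the deployment list changes, so a production router would likely need a cache-backed mapping or consistent hashing to limit that.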

Connection management & reliability

- Fix Redis connection pool reliability — prevent connection exhaustion under load - PR #21717

- Fix Prisma connection self-heal for auth and runtime reconnection (reverted, will be re-introduced with fixes) - PR #21706

- Make `PodLockManager.release_lock` atomic compare-and-delete - PR #21226
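The compare-and-delete pattern named in the last item can be illustrated with an in-memory stand-in. The `InMemoryLockStore` class below is hypothetical and is not `PodLockManager`. It only shows why the ownership check and the delete must happen as a single atomic step, so that one pod cannot delete a lock another pod has since acquired.

```python
import threading
from typing import Dict


class InMemoryLockStore:
    """Hypothetical single-process stand-in for a shared lock store."""

    def __init__(self) -> None:
        self._owners: Dict[str, str] = {}
        self._mutex = threading.Lock()

    def acquire(self, key: str, owner: str) -> bool:
        """Take the lock only if nobody holds it."""
        with self._mutex:
            if key in self._owners:
                return False
            self._owners[key] = owner
            return True

    def release(self, key: str, owner: str) -> bool:
        """Delete the lock only if `owner` still holds it.

        The comparison and the delete happen under one mutex, so no other
        caller can change ownership between the check and the delete.
        """
        with self._mutex:
            if self._owners.get(key) != owner:
                return False
            del self._owners[key]
            return True


store = InMemoryLockStore()
print(store.acquire("cron-job", "pod-a"))   # pod-a takes the lock
print(store.release("cron-job", "pod-b"))   # pod-b cannot release a lock it does not own
print(store.release("cron-job", "pod-a"))   # the owner releases it
```

With a shared store such as Redis, the same check-then-delete is usually made atomic by running both steps in a single server-side script, instead of issuing a read followed by a separate delete.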

Database Changes

Schema Updates

| Table | Change Type | Description | PR |
|---|---|---|---|
| LiteLLM_DeletedVerificationToken | New Column | Added project_id column | PR #21587 |
| LiteLLM_ProjectTable | New Table | Project management for organizing resources | PR #21078 |
| LiteLLM_VerificationToken | New Column | Added last_active timestamp for key activity tracking | PR #21545 |
| LiteLLM_ManagedVectorStoreTable | Migration | Make vector store migration idempotent | PR #21325 |

Documentation Updates

- Add OpenAI Agents SDK with LiteLLM guide - PR #21311

- Access Groups documentation - PR #21236

- Anthropic beta headers documentation - PR #21320

- Latency overhead troubleshooting guide - PR #21600, PR #21603

- Add rollback safety check guide - PR #21743

- Incident report: vLLM Embeddings broken by encoding_format parameter - PR #21474

- Incident report: Claude Code beta headers - PR #21485

- Mark v1.81.12 as stable - PR #21809

New Contributors

- @mjkam made their first contribution in PR #21306

- @saneroen made their first contribution in PR #21243

- @vincentkoc made their first contribution in PR #21239

- @felixti made their first contribution in PR #19745

- @anttttti made their first contribution in PR #20731

- @ndgigliotti made their first contribution in PR #21222

- @iamadamreed made their first contribution in PR #19912

- @sahukanishka made their first contribution in PR #21220

- @namabile made their first contribution in PR #21195

- @stronk7 made their first contribution in PR #21372

- @ZeroAurora made their first contribution in PR #21547

- @SolitudePy made their first contribution in PR #21497

- @SherifWaly made their first contribution in PR #21557

- @dkindlund made their first contribution in PR #21633

- @cagojeiger made their first contribution in PR #21664